BigARTM: The Algorithm Under The Hood¶

ToDo: link BigARTM to online batch PLSA algorithm.

ToDo: explain the notation in the algorithm.

ToDo: update the algortihm with regularization.

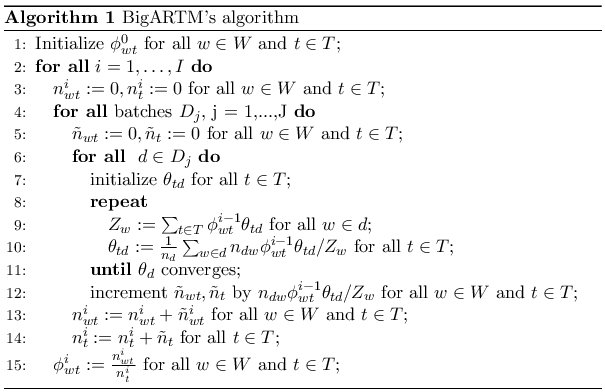

In this algorithm most CPU resources are consumed on steps 8-11 to infer topic distribution for each document. This operation can be executed concurrently across documents or batches. In BigARTM this parallelization is done across batches to avoid splitting the work into too small junks.

Processing each batch produces counters $tilde n_{wt}$ and $tilde n_{t}$, which should be then merged with the corresponding counters coming from other batches. Since this information is produced by multiple concurrent threads the merging process should be thread-safe and properly synchronised. Our solution is to store all counters $tilde n_{wt}$ and $tilde n_{t}$ into a single queue, from where they can be picked up by a single merger thread. This thread will then accumulate the counters without any locking.

Further in this text the term outer iteration loop stands for the loop at the step 2, and the term emph{inner iteration loop} stands for the loop at step 8. Instead of “repeat until it converges” criteria current implementation uses a fixed number of iterations, which is configured manually by the user.

Step 15 is incorporated into all steps that require $phi_{wt}$ (e.g. into steps 9, 10 and 11). These steps utilize counters from the previous iteration ($n^{i-1}_wt$ and $n^{i-1}_t$), which are no longer updated by the merger thread, hence they represent read-only data and can be accessed from multiple threads without any synchronization. At the same time the merger thread will accumulate counters for $n^i_{wt}$ and $n^i_t$ for the current iteration, again in a lock-free manner.